Run LLM inference at maximum throughput

This example demonstrates some techniques for running LLM inference at the highest possible throughput on Modal.

For more on other aspects of maximizing the performance of LLM inference, see our guide. For a simpler introduction to LLM serving, see this example.

As our sample application, we use an LLM to summarize thousands of filings with the U.S. federal government’s Securities and Exchange Commission (SEC), made available to the public for free in daily data dumps via the SEC’s Electronic Data Gathering, Analysis, and Retrieval System (EDGAR). We like to check out the Form 4s, which detail (legal) insider trading.

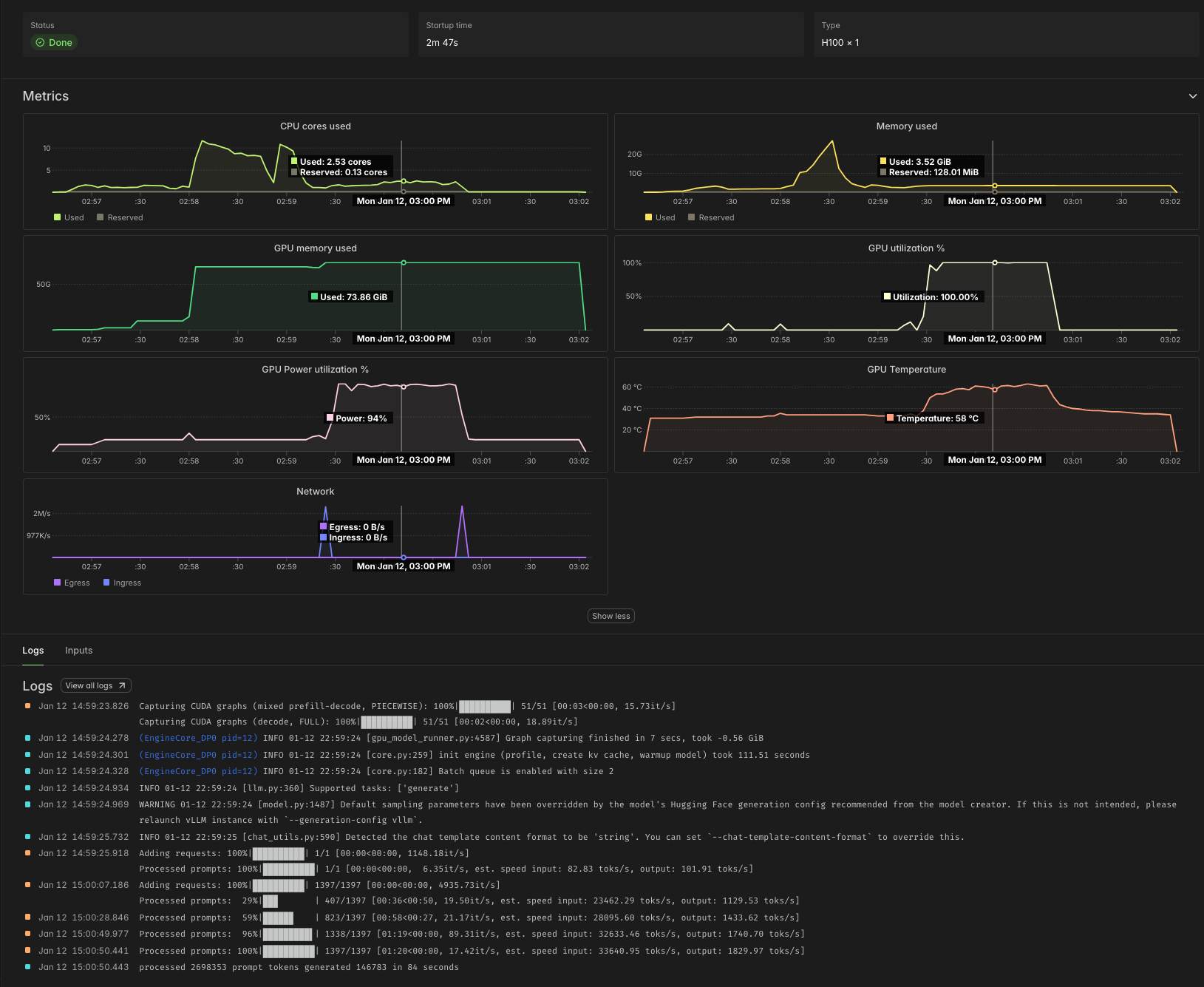

Using the 8B-parameter Qwen 3 LLM on this task, whose inputs average a few thousand tokens and whose outputs average a few hundred tokens, we observe processing speeds of ~30,000 input tok/s and ~2,000 output tok/s per H100 GPU, as in the sample Modal Dashboard screenshot below. Note the 100% GPU utilization, indicating the absence of host overhead, and the high GPU power utilization, further indicating we are close to the hardware’s physical limits.

At Modal’s current rates as of early 2026, that comes out to roughly 4¢ per million tokens. According to Artificial Analysis, API providers charge roughly five times as much for the same workload.
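To check the per-million-token figure against your own numbers, divide the price per GPU-hour by the millions of tokens processed per GPU-hour. The sketch below leaves the price as an input. The $4.00/hour value is a hypothetical placeholder, not Modal's published rate. We also assume that input and output tokens are counted together.

```python
def cost_per_million_tokens(gpu_dollars_per_hour: float, tokens_per_second: float) -> float:
    """Dollars per million tokens for one GPU running flat out."""
    tokens_per_hour = tokens_per_second * 3600
    return gpu_dollars_per_hour / (tokens_per_hour / 1_000_000)


# Hypothetical hourly price (assumption); check Modal's pricing page for the real rate.
HYPOTHETICAL_H100_PRICE = 4.00  # dollars per GPU-hour

# Combined input + output throughput observed above: ~30,000 + ~2,000 tok/s.
throughput = 30_000 + 2_000

cost = cost_per_million_tokens(HYPOTHETICAL_H100_PRICE, throughput)
print(f"${cost:.4f} per million tokens")
```

With that placeholder price, ~32,000 tok/s works out to about 3.5¢ per million tokens, in the same ballpark as the rough figure above.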

Organizing a batch job on Modal

We start by defining a Modal App, which collects together the Modal resources our batch job uses. While we’re at it, we import a bunch of the libraries we will need later.

Many batch jobs work nicely as scripts: code that is run from a shell, ad hoc, rather than deployed. For that, we define a local_entrypoint with code that runs locally when we pass our script to modal run, and that triggers and orchestrates remote execution.

We demonstrate two techniques for collecting the results of a batch job, toggled by passing the --wait-for-results/--no-wait-for-results flag on the command line.

When we --wait-for-results, we pass the modal.FunctionCalls that make up our batch job to FunctionCall.gather, which returns once our job is done. Here, we just print the results, but in a more realistic setting you might save them to disk.

Instead of waiting for results, we can retrieve them asynchronously based on the FunctionCall ID, which is a simple string. Results are stored in Modal for one week.

In our local_entrypoint, these IDs are printed, but you might store them in a file on disk, add them to your database, or put them in a Modal Queue or Dict for later retrieval.
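The "store them in a file on disk" option needs very little code, because the IDs are plain strings. Below is a minimal sketch with hypothetical helper names. In a later session, each loaded ID could be turned back into a handle with `modal.FunctionCall.from_id(call_id)` and its result fetched with `.get()`. We leave Modal out of the block so that it runs with the standard library alone.

```python
import json
from pathlib import Path


def save_call_ids(path: Path, call_ids: list[str]) -> None:
    """Persist FunctionCall IDs (plain strings) so another process can fetch results later."""
    path.write_text(json.dumps(call_ids))


def load_call_ids(path: Path) -> list[str]:
    """Read back the IDs written by save_call_ids."""
    return json.loads(path.read_text())
```

Because results are only kept for a week, any process that reads these IDs back needs to run within that window.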

The meat of the work is done in our orchestrate function. It manages the overall pipeline of execution: extracting data from the raw data source, transforming it into a cleaner format, and then processing it with the LLM.

For both extraction and transformation, we use .map, which fans out inputs over containers in parallel. Each invocation handles one day’s worth of data, the same granularity offered by the data source.
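Since each invocation handles one day, the inputs to the extraction .map are just a list of dates. Here is a small sketch of building that list; the helper name is hypothetical. It makes no assumption about whether the Feed publishes an archive for every calendar day, so the extraction step should tolerate days with no data.

```python
from datetime import date, timedelta


def days_between(start: date, end: date) -> list[date]:
    """Every calendar day from start to end, inclusive: one .map input per day."""
    n_days = (end - start).days + 1
    return [start + timedelta(days=i) for i in range(n_days)]


days = days_between(date(2025, 1, 1), date(2025, 1, 7))
print([d.isoformat() for d in days])
```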

For the LLM call, we use .spawn, which triggers asynchronous execution of the LLM and immediately returns a FunctionCall that can later be used to .get the result (or to .gather several results).
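If the difference between .map and .spawn is unfamiliar, a local analogy may help. The sketch below swaps in Python's `concurrent.futures` thread pool for Modal's containers. It is not Modal's API. `pool.map` plays the role of `.map`, since it fans out inputs and collects results in input order. `pool.submit` plays the role of `.spawn`, since it returns a handle immediately. `future.result()` plays the role of `FunctionCall.get`. The three pipeline functions are toy stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor


def extract(day: int) -> str:
    return f"raw-{day}"  # toy stand-in for downloading one day's data


def transform(raw: str) -> str:
    return raw.replace("raw", "clean")  # toy stand-in for parsing


def summarize(batch: list[str]) -> list[str]:
    return [f"summary of {item}" for item in batch]  # toy stand-in for the LLM


with ThreadPoolExecutor(max_workers=4) as pool:
    # Like .map: fan out one input per day; results come back in input order.
    raw = list(pool.map(extract, range(3)))
    clean = list(pool.map(transform, raw))
    # Like .spawn: returns a handle right away while the work runs in the background.
    future = pool.submit(summarize, clean)
    # Like FunctionCall.get: block until the result is ready.
    summaries = future.result()

print(summaries)
```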

We run orchestrate itself as a .remote Modal Function call so that it can keep running even after our local client disconnects (so long as we use modal run --detach). In that case, we dump the FunctionCall IDs into the logs, but you might also write them to an external store for later retrieval.

The app.function decorator is all we need to turn a Python function like orchestrate into a remote Modal Function!

Before going any further, we should agree on the format that our transform and llm.process functions will use to communicate individual elements. We’ll use a lightweight Python dataclass to represent each SEC Filing.

For our task, we’re going to take the text of a filing and produce a summary, so the text is mandatory and the summary starts out empty (None), to be filled in by the LLM. We’ll also keep a bit of metadata about each filing. But we’re not sure all of these fields will exist (API data is messy!), so we reserve the right to set them to None.
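A dataclass matching that description might look like the sketch below. The metadata field names (`form_type`, `company`, `filing_date`) are hypothetical placeholders, not necessarily the fields the example keeps.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Filing:
    text: str  # mandatory: the document we want summarized
    summary: Optional[str] = None  # empty until the LLM fills it in
    # Hypothetical metadata fields; any of them may be missing from the raw data.
    form_type: Optional[str] = None
    company: Optional[str] = None
    filing_date: Optional[str] = None
```

Giving every optional field a default of None lets the transform step construct a Filing from whatever fields it actually finds.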

With the basic orchestration set up, let’s implement each component in turn.

Serving tokens at maximum throughput

First, the LLM service.

Configuring vLLM for maximum throughput

We choose the vLLM inference engine. You might alternatively use SGLang. In our experience, new models and other features are implemented first in vLLM, and vLLM has a small edge in throughput over SGLang, but either can work well.

vLLM will automatically download the model for us and produce some compilation artifacts, all of which are saved to disk. Modal Functions are serverless and disks are ephemeral, so we attach a Modal Volume to the locations where vLLM saves these files to ensure that they persist.

Like a database or web server, an LLM inference engine typically has a few knobs to twiddle to adjust performance on different workloads.

First and foremost, you need to pick the hardware it will run on. We’ll be running a smaller model in 8-bit floating point (FP8) format, which Hopper and later GPUs support natively. To maximize throughput, we want to ensure our inference is compute-bound: the bottleneck should not be loading weights/KV cache from memory but performing computations on those values. Roughly speaking, we want to be able to put together a batch whose size is within an order of magnitude of the GPU’s ridge point arithmetic intensity for our floating point format, which is ~600 FLOP/byte for H100 SXM Tensor Cores on FP8 data.
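The ~600 figure comes from dividing peak compute by memory bandwidth. The sketch below uses NVIDIA's published H100 SXM numbers (about 1,979 dense FP8 TFLOP/s and 3.35 TB/s of HBM bandwidth). It links the ridge point to batch size with a rough, simplified model: during decoding, each one-byte FP8 weight is loaded once and used for about 2 FLOPs (a multiply and an add) per sequence in the batch. That model ignores activation and KV-cache traffic, so treat it as an overestimate.

```python
# Approximate spec-sheet numbers for an H100 SXM (dense, no sparsity).
FP8_TENSOR_FLOPS = 1979e12  # FLOP/s
HBM_BANDWIDTH = 3.35e12  # bytes/s

ridge_point = FP8_TENSOR_FLOPS / HBM_BANDWIDTH  # FLOP per byte
print(f"ridge point ~ {ridge_point:.0f} FLOP/byte")


def decode_matmul_intensity(batch_size: int, bytes_per_weight: float = 1.0) -> float:
    """Rough arithmetic intensity of a weight matmul during decoding.

    Each weight is read once and used in 2 FLOPs per sequence in the batch.
    Activation and KV-cache traffic is ignored, so real intensity is lower.
    """
    return 2 * batch_size / bytes_per_weight
```

Under this simplified model, a batch of roughly 300 sequences brings the weight matmuls to the ridge point. That is why batches in the hundreds are the target.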

A single H100 GPU has enough GPU RAM for pretty large batches of this data for this model, so we stick with one of those — and just one! Deploying onto multiple GPUs would increase throughput per replica, but not throughput per GPU and so not throughput per dollar.

The dictionary of engine arguments we pass to vLLM covers the knobs we found it important to tune in this case. Specifically, we set a maximum sequence length, based on the data, to give the engine more hints about how to pack batches. We select FlashInfer as the provider of the attention kernels, which vLLM recommends for higher throughput in offline serving. Finally, we turn on the asynchronous batch scheduler, which gives a small boost to throughput.
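The sketch below shows one way to derive the maximum sequence length from the data: the longest prompt plus the longest completion. The `async_scheduling` key and the `VLLM_ATTENTION_BACKEND=FLASHINFER` environment variable are our assumptions about how your vLLM version exposes the async scheduler and FlashInfer. Verify both against the vLLM docs for the version you install. The model ID and token limits are hypothetical. The dictionary would be passed as `vllm.LLM(**engine_kwargs)`, with the environment variables set before vLLM is imported.

```python
def build_engine_config(
    model: str, max_input_tokens: int, max_output_tokens: int
) -> tuple[dict, dict]:
    """Return (kwargs for vllm.LLM, environment variables) for a throughput-oriented run."""
    engine_kwargs = {
        "model": model,
        # Longest prompt we will send plus the longest completion we will request.
        "max_model_len": max_input_tokens + max_output_tokens,
        # Assumed option name for the async scheduler; check your vLLM version.
        "async_scheduling": True,
    }
    env = {
        # Assumed way to select FlashInfer attention kernels; check your vLLM version.
        "VLLM_ATTENTION_BACKEND": "FLASHINFER",
    }
    return engine_kwargs, env


# Hypothetical model ID and limits.
engine_kwargs, env = build_engine_config(
    "Qwen/Qwen3-8B-FP8", max_input_tokens=8192, max_output_tokens=1024
)
```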

For details on these and other arguments, we recommend checking out the vLLM docs, which include lots of recipes and recommendations for different workloads and models.

Deploying vLLM on Modal

For offline, throughput-oriented serving, we can use the LLM interface of the vLLM SDK. This interface processes batches of inputs synchronously, unlike the AsyncLLM or HTTP serving interfaces.

Dumping a large batch all at once exposes the maximum amount of parallelism to the engine and adds the least request-management overhead, so we can expect it to maximize throughput.

Critically, though, this means we don’t get any results until all of them are finished. Being willing to give up latency like this is a key engineering degree of freedom for throughput-oriented offline/batch jobs!

We use a Modal Cls to control the spinup and shutdown logic for the LLM engine. Specifically, we create the engine (and warm it up with a test request) in a method decorated with modal.enter, and we shut it down in a method decorated with modal.exit. The code in these methods runs only once per replica, when the replica is created and destroyed, respectively.

In between, we run a batch of Filings through the engine, adding the model’s output text to the summary field.
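The batch step itself is short. The sketch below repeats a trimmed Filing so that it runs on its own, and takes the engine as a plain `generate` callable so it can be tested without a GPU. The prompt template is hypothetical. With vLLM's offline interface, `generate` could wrap `llm.generate(prompts, sampling_params)`, which returns one RequestOutput per prompt in input order. You would then take `outputs[0].text` from each.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Filing:
    text: str
    summary: Optional[str] = None


# Hypothetical prompt template; the example's actual prompt is not shown here.
PROMPT_TEMPLATE = "Summarize the following SEC filing in a few sentences:\n\n{text}"


def process(
    filings: List[Filing], generate: Callable[[List[str]], List[str]]
) -> List[Filing]:
    """Send every prompt to the engine in one call, then attach outputs to their Filings."""
    prompts = [PROMPT_TEMPLATE.format(text=f.text) for f in filings]
    # One big synchronous batch: nothing comes back until every prompt is done.
    outputs = generate(prompts)
    if len(outputs) != len(filings):
        raise ValueError("engine returned a different number of outputs than prompts")
    for filing, summary in zip(filings, outputs):
        filing.summary = summary
    return filings


# With vLLM, generate could be:
#   lambda ps: [o.outputs[0].text for o in llm.generate(ps, sampling_params)]
```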

And that’s it for the LLM portion of the pipeline! The remainder of this document is code and explanation for the data loading and processing steps. The details are mostly specific to this dataset, but there are a few general Modal tips and tricks for batch processing along the way.

Transforming SEC filings for batch processing

We can avoid having to deal directly with the low-level details of the SEC’s data format by using the edgartools library. And we can avoid worrying about compatibility with the other libraries in our project by putting it in a separate container Image.

Instead of hitting the SEC’s EDGAR Feed API every time we want to run a job, we’ll cache the results for each day in a Modal Volume. We use Modal’s v2 Volumes, which have no limit on the total number of stored files.

Note that v2 Volumes are still in beta, so data loss may be possible. This is acceptable for most batch jobs, which extract data from an external source of truth.

Our transform function operates on a folder of data with one filing per file (in .nc format).

Loading thousands of filings with edgartools takes tens of seconds. We can speed it up by running in parallel on Modal instead! But running each file in a separate container would add too much overhead, so we group the files into chunks of ~100 and pass those to the Modal Function that actually does the work.
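Grouping files into chunks of ~100 takes only a few lines. In the sketch below, the helper name is hypothetical and the chunk size follows the text. Each yielded list would become one input to the .map call.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def chunked(items: Sequence[T], size: int = 100) -> Iterator[list[T]]:
    """Yield consecutive chunks of at most `size` items."""
    if size < 1:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield list(items[start : start + size])


paths = [f"{i}.nc" for i in range(250)]
sizes = [len(chunk) for chunk in chunked(paths)]
```

For 250 files this gives three inputs of 100, 100, and 50 files. That is few enough to keep per-container overhead small and many enough to spread across containers.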

Again, we use .map to transparently scale out across containers. Because these containers are cheap to scale up and are only needed for a brief burst during the pipeline, we set the scaledown_window for the containers to a much lower value than the default of five minutes: here, five seconds.

Loading filings from the SEC EDGAR Feed

We complete our reverse tour of the pipeline by loading the data from the original source: the SEC EDGAR Feed, an archive of daily filings going back over three decades.

We use the requests library to pull data from the API.

We’ll be downloading large files (from megabytes up to a few gigabytes) with low concurrency, so there’s little benefit to running an asynchronous web client.

Our concurrency is limited by the policies of the SEC EDGAR API.

The limit is 10 RPS, which we aim to stay under by setting the max number of containers running our extraction to 10.
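Capping containers at 10 keeps the aggregate rate at or below 10 requests per second only if each container sends at most one request per second. The example relies on the container cap. If you want to enforce the per-container half explicitly, a minimal in-process throttle might look like the sketch below. The `RateLimiter` class is our own hypothetical helper, not part of the example. Its clock and sleep are injectable so it can be tested without waiting.

```python
import time
from typing import Callable


class RateLimiter:
    """Space calls at least 1/max_per_second apart within a single process."""

    def __init__(
        self,
        max_per_second: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.interval = 1.0 / max_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()

    def acquire(self) -> None:
        """Block until the next request is allowed, then reserve the following slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval
```

To use it, call `limiter.acquire()` immediately before each request in the extraction function.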

We add retries via our Modal decorator as well, so that we can tolerate temporary outages or rate limits.
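The Modal decorator handles retries for us. To see what that buys, the sketch below is a plain-Python analogue of retrying with exponential backoff. It is not Modal's implementation, and the parameter names and defaults are our own choices.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    max_retries: int = 3,
    initial_delay: float = 1.0,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on any exception with exponentially growing delays."""
    delay = initial_delay
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except Exception:
            if attempt == max_retries:
                raise  # out of retries: surface the last error
            sleep(delay)
            delay *= backoff
    raise AssertionError("unreachable")
```

Growing the delay between attempts gives a temporary outage or a rate limit time to clear, without hammering the API in the meantime.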

Note that we also attach the same Volume used in the transform Functions above, and we explicitly .commit our writes so that they will be visible to future containers running transform.

Addenda

The remainder of this code consists of utility functions and boilerplate used in the main code above.

Utilities for transforming SEC Filings

The code in this section is used to transform, normalize, and otherwise munge the raw filings downloaded from the SEC.

For LLM serving, the most important piece here is the function to truncate documents. A maximum document length can be used to set a loose bound on the sequence length in the LLM engine configuration.
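The example doesn't spell out how document length is measured here. The hypothetical sketch below caps length in UTF-8 bytes. Byte-level BPE tokenizers, used by many recent LLMs, produce at most one token per byte, so a byte cap also bounds the document's token count. That bound excludes the prompt template and any special tokens.

```python
def truncate_utf8(text: str, max_bytes: int) -> str:
    """Cut text to at most max_bytes of UTF-8 without splitting a character."""
    encoded = text.encode("utf-8")
    if len(encoded) <= max_bytes:
        return text
    # errors="ignore" drops a multi-byte character cut in half at the end.
    return encoded[:max_bytes].decode("utf-8", errors="ignore")
```

The engine's maximum sequence length can then be set to this cap, plus the template's overhead, plus the maximum output length.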

Utilities for loading filings from the SEC EDGAR Feed

The code in this section is used to load raw data from the Feed section of SEC EDGAR.

Daily dumps are stored in tar archives, which the code below extracts. Archives for particular days are located by searching the SEC EDGAR Feed indices for the appropriate URL.
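The extraction step can be handled with the standard library's `tarfile` module. The sketch below is a hypothetical helper, not the example's code. It opens the archive with `mode="r:*"` so gzip compression is detected automatically. It also writes each regular file under its base name only, which keeps a crafted member path like `../x` from escaping the output folder. Flattening the folder structure assumes that file names within a daily archive are unique.

```python
import shutil
import tarfile
from pathlib import Path


def extract_filings(archive_path: Path, out_dir: Path) -> list[Path]:
    """Write each regular file in a (possibly compressed) tar archive into out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    with tarfile.open(archive_path, mode="r:*") as archive:  # r:* auto-detects compression
        for member in archive:
            if not member.isfile():
                continue  # skip directories, links, and devices
            # Keep only the base name so member paths cannot escape out_dir.
            target = out_dir / Path(member.name).name
            source = archive.extractfile(member)
            if source is None:
                continue
            with source, open(target, "wb") as sink:
                shutil.copyfileobj(source, sink)  # stream, don't load whole files
            written.append(target)
    return written
```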

For full compliance with SEC EDGAR etiquette, we recommend setting the SEC_USER_AGENT environment variable to your name and email.
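The example uses requests. The sketch below uses the standard library's `urllib.request` instead, only to show the header, and the helper name is hypothetical. With requests, the equivalent is passing `headers={"User-Agent": user_agent}` to `requests.get`. The sketch fails loudly when the variable is unset rather than sending an anonymous request.

```python
import os
import urllib.request


def sec_request(url: str) -> urllib.request.Request:
    """Build a request carrying the contact User-Agent read from SEC_USER_AGENT."""
    user_agent = os.environ.get("SEC_USER_AGENT")
    if not user_agent:
        raise RuntimeError("Set SEC_USER_AGENT to something like 'Your Name you@example.com'")
    return urllib.request.Request(url, headers={"User-Agent": user_agent})
```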